How we accidentally DDOS’ed ourselves

Merchants at Unipage have access to an order fulfillment application that shows them incoming orders. Through this application they can easily see order details paired with customer details. To make things even better, merchants get a direct line of communication with their customer through the use of an intuitive interface.

Needless to say - this application is crucial in the order fulfilment flow of our customers.

The pot that boiled the frog

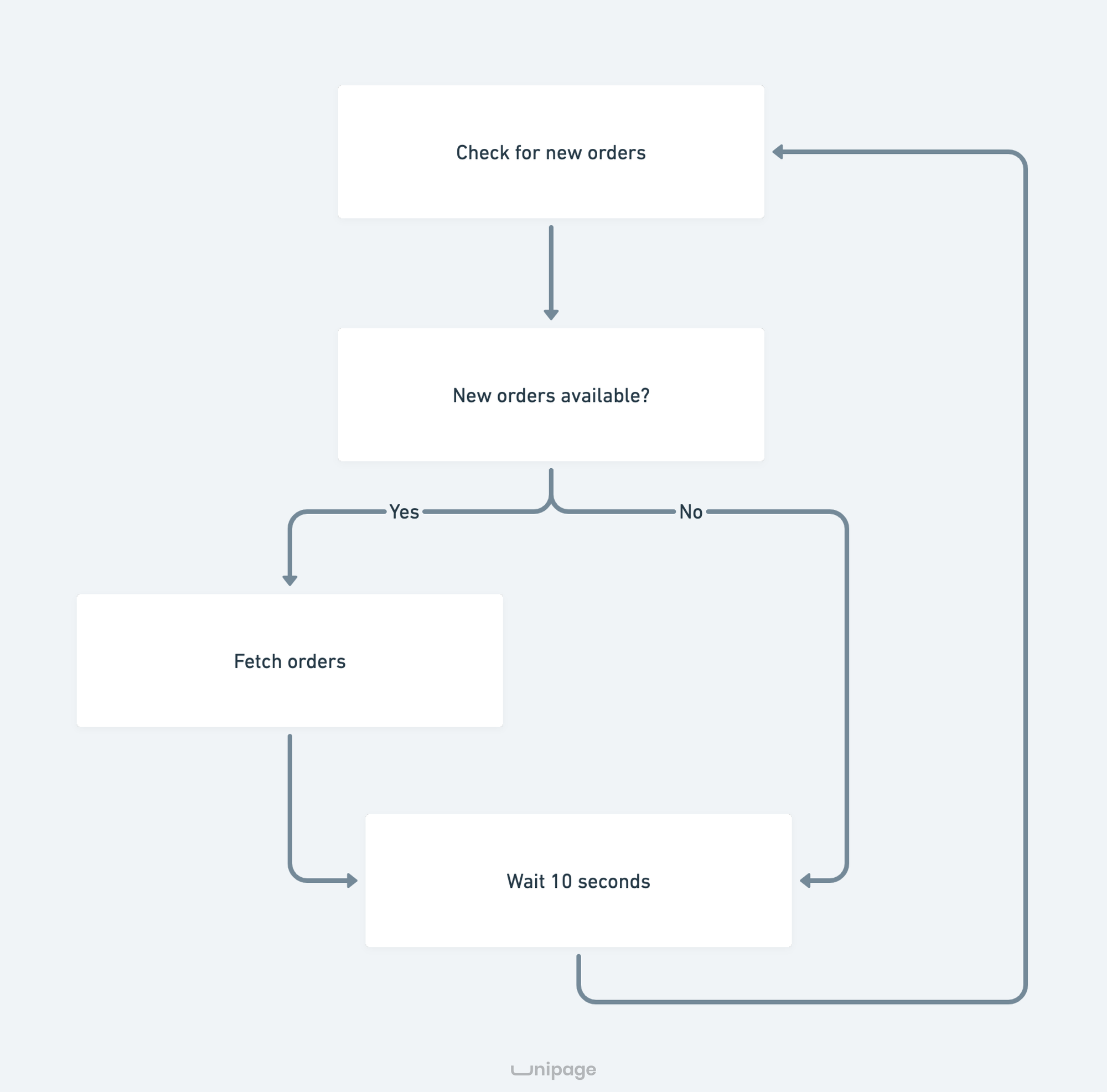

Every 10 seconds, this application would send an HTTP request to an API to check if there are new orders. If needed, the application would do a second HTTP request to fetch all new orders.

The backend would execute a simple query:

SELECT count(id) FROM orders WHERE business_id = ... AND created_at >= ... AND created_at < ...This worked great when we had 40 customers and averaged 100 daily orders, but it turned out this was a ticking timebomb. 💣

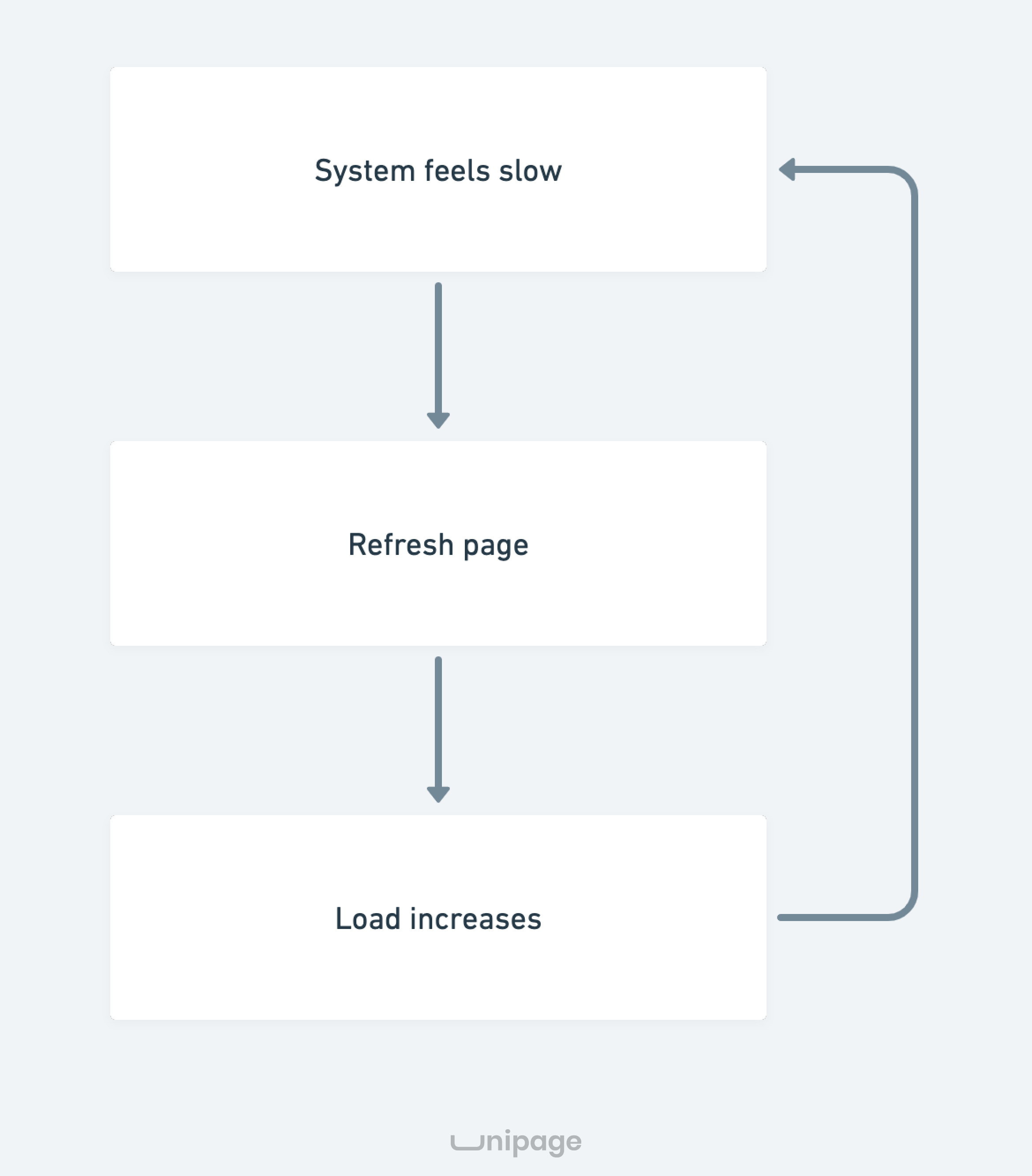

In 2020, our merchants and orders skyrocketed, and they were relying on our systems more than ever. We were still having growing pains at the time, when all of a sudden on a friday evening we got flooded with phonecalls: the system felt very unstable, customers were complaining, orders weren't loading...

A funny thing happens when a system starts failing: people start refreshing their pages in frustration, causing even more load on the system as more queries get executed, causing even more frustration, causing more reloads, ... this snowball effect eventually caused our entire system to go down.

Upon inspecting, it was a miracle this hadn't happened sooner. Allowing 240 merchants (at the time) to execute queries every 10 seconds might not have been the best idea.

To buy ourselves some time, we increased the 10 second interval to 20, halving the amount of queries executed. Since this was only a temporary hack, we had to start working on a sustainable fix ASAP.

Redis to the rescue

Our end-game was to implement websockets, so we could drop the MySQL count queries alltogether. Since this was not a quick fix, I decided to first implement a temporary solution.

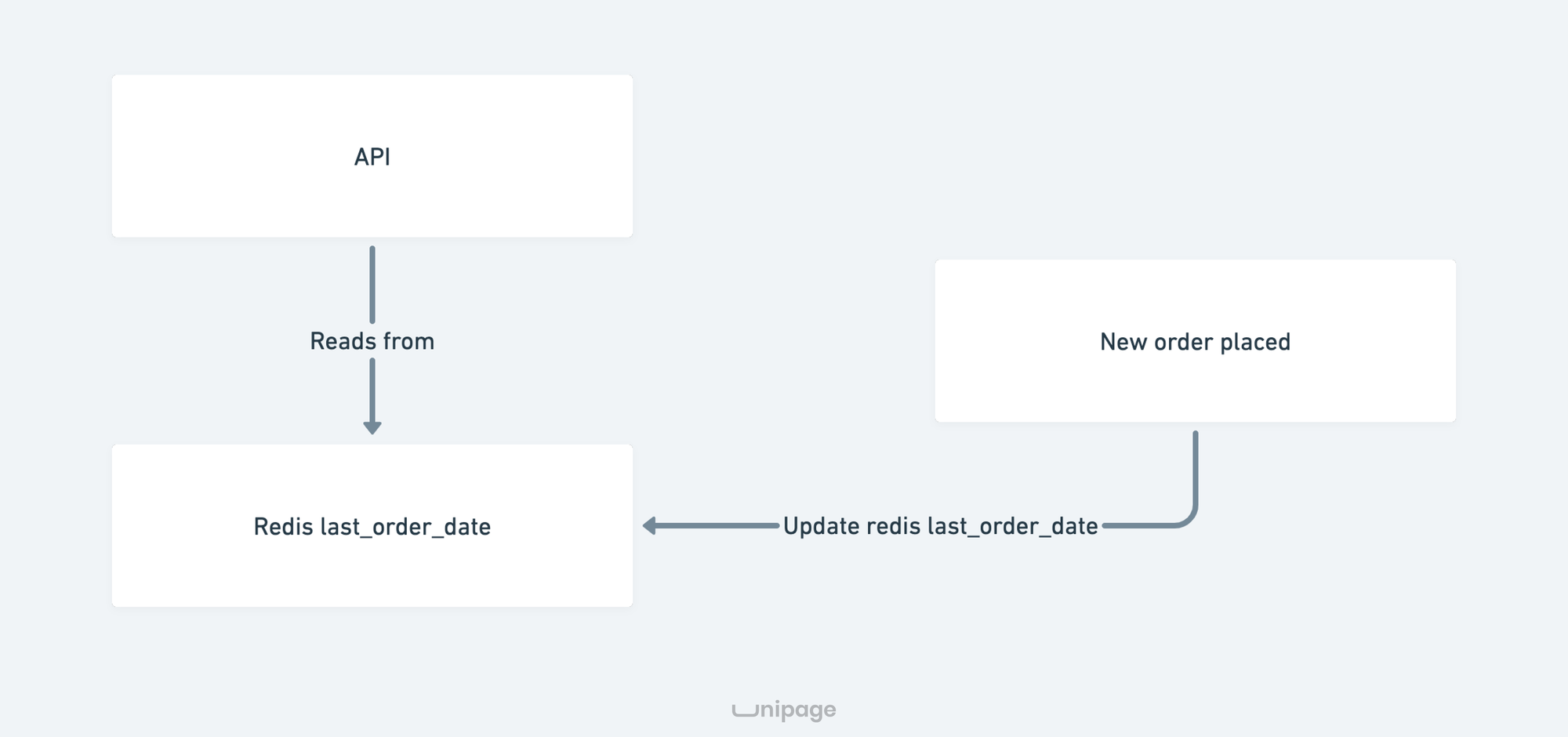

To remove the need for a count query, I started keeping track of the last order date in a Redis cache. Every time a customer placed a new order, I'd update the last_order_date to the current timestamp. The application had to be modified slightly for this change, but overall it was a very low effort change.

The impact of this change was huge and increased the overall performance of our application significantly. Nice side effect: API response time decereased to ~75ms on average.

This change bought us the time we needed to implement websockets the right way.

End-game: Websockets

Since the pressure was off, we had the time to set up our websocket solution using Laravel Echo and Echo Server. When the application becomes active, it opens up a secure websocket connection with the server. Every time an order gets placed, the server would push the order details to the application over the websocket.

This approach allows us to remove the entire overhead of keeping the applications in sync with the database. Instead we now rely 100% on websockets.

Today, over 1200 applications become active daily. Using websockets they keep their orders synchronised with the server without any hickups whatsoever. 🚀

No spam, no sharing to third party. Only you and me.