Implementing an aggressive Redis caching strategy

How prepared are you when 5000 people suddenly storm your application?

Unipage is the cheapest and fastest webshop solution for every type of restaurant, with over 1,400 active webshops to date.

In the summer of 2020, Unipage onboarded a customer that was completely different from our other customers: a campsite. During that first summer of the pandemic, the government was scrambling to respond to the health situation with a plethora of rules.

The campsite in question had a swimming pool and had to impose a limit of 300 swimmers a day. It used Unipage creatively, selling 300 pool entry tickets every day at 11:00. It was a bit of an unconventional use of the system, but at the time we saw no issue with it.

A slight miscalculation on our end, however, was that the campsite had 5000 guests staying who all had one thing in common: they were uncomfortably warm (it was a hot summer!) and were hoping to get an entry ticket to the pool.

DDoS'ed by surprise

What followed caught us completely off guard: 5000 people flooded the Unipage shop at 10:59 and effectively DDoS'ed our entire system by constantly refreshing the page until the pool tickets became available. The result was roughly 15 minutes of downtime across our whole system, taking down all our other shops as well. Not ideal.

Lesson learned

Never underestimate 5000 uncomfortably warm people looking for refreshment 😬

200,000 MySQL queries in a minute

Unipage is a multi-tenant Laravel application that has been up and running for over 10 years. Before the pandemic, we had ~40 customers and handled an average of 80 orders a day. All of this ran on a shared, cPanel-based VPS, and we never thought twice about caching.

By the summer of 2020, these numbers had exploded to 400 customers and 1200 daily orders. At the time, our application ran off a single MySQL database (handling both reads and writes) and had no caching in place.

Every page view ran 40 MySQL queries (products, availability, user info, company info, ...). Multiply that by 5000 visitors and we ended up with 200k MySQL queries in 60 seconds.
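Spelled out, assuming each of the 5000 visitors loaded the page once during that minute (every extra refresh would add another 40 queries on top):

```latex
\[
40\ \frac{\text{queries}}{\text{page view}} \times 5000\ \frac{\text{page views}}{\text{minute}} = 200{,}000\ \frac{\text{queries}}{\text{minute}}
\]
```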

Redis to the rescue

Taking a critical look at the data needed to render the page, we concluded that almost all of it could be cached. The cache would only have to be cleared on a few triggers:

- When a new order is placed

- When product availability changes

- When products get updated (e.g. price)

- ...
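The invalidation end goal can be sketched like this. It is not Unipage's code (Unipage is a Laravel app): it's a minimal Python illustration in which a plain dict stands in for Redis, and the `ShopPageCache` class, the `shop:{id}:page` key format and the handler names are all hypothetical. The page data stays cached until one of the triggers above fires for that shop, and only then is it rebuilt.

```python
from typing import Any, Callable, Dict


class ShopPageCache:
    """Hypothetical per-shop page cache that is cleared by domain events.

    A dict stands in for Redis; in production these calls would map onto
    Redis GET / SET / DEL.
    """

    def __init__(self) -> None:
        self._store: Dict[str, Any] = {}

    @staticmethod
    def key(shop_id: int) -> str:
        # One entry per tenant (shop), so clearing one shop's cache never
        # touches the cached pages of other shops.
        return f"shop:{shop_id}:page"

    def get_or_build(self, shop_id: int, build: Callable[[], Any]) -> Any:
        k = self.key(shop_id)
        if k not in self._store:
            # Cache miss: run the expensive queries once and keep the result
            # until one of the triggers clears it.
            self._store[k] = build()
        return self._store[k]

    def invalidate(self, shop_id: int) -> None:
        self._store.pop(self.key(shop_id), None)


cache = ShopPageCache()


# Hypothetical event handlers, one per trigger in the list above.
def on_order_placed(shop_id: int) -> None:
    cache.invalidate(shop_id)


def on_availability_changed(shop_id: int) -> None:
    cache.invalidate(shop_id)


def on_product_updated(shop_id: int) -> None:
    cache.invalidate(shop_id)
```

Because nothing expires on a timer, the database is only queried after data actually changes. The catch is that every code path that changes orders, availability or products has to remember to fire its trigger, which is part of why this takes more time to get right than a blunt TTL.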

We had now defined our caching end goal, but implementing a great caching strategy takes time and resources, a luxury we didn't have at the time. To mitigate the problem quickly, we cached all the data needed to render the page in Redis for 5 seconds.
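A minimal sketch of such a 5-second cache, using the "remember" pattern (Laravel ships this as `Cache::remember`; the post doesn't show Unipage's actual code). It is written in Python against redis-py-style `get(key)` and `set(key, value, ex=seconds)` calls. `remember`, `FakeRedis` and the key name are hypothetical, and `FakeRedis` only exists so the example runs without a Redis server.

```python
import json
import time
from typing import Any, Callable, Dict, Optional, Tuple


def remember(client: Any, key: str, ttl_seconds: int, build: Callable[[], Any]) -> Any:
    """Return the cached value for `key`, or build, store and return it.

    `client` only needs redis-py style `get(key)` and `set(key, value, ex=...)`,
    so a real `redis.Redis` instance could be passed in instead of FakeRedis.
    """
    cached = client.get(key)
    if cached is not None:
        return json.loads(cached)
    value = build()  # e.g. the ~40 queries needed to render the page
    # Store with an expiry: Redis drops the key after ttl_seconds on its own.
    client.set(key, json.dumps(value), ex=ttl_seconds)
    return value


class FakeRedis:
    """Tiny in-memory stand-in for a Redis client that honours the `ex` expiry."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._data: Dict[str, Tuple[str, float]] = {}

    def get(self, key: str) -> Optional[str]:
        item = self._data.get(key)
        if item is None or self._clock() >= item[1]:
            return None  # missing or expired
        return item[0]

    def set(self, key: str, value: str, ex: int) -> bool:
        self._data[key] = (value, self._clock() + ex)
        return True
```

Since the expiry is set on write, served data is at most about 5 seconds old, which is why this works as a stopgap even without any of the invalidation triggers in place.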

This simple trick meant the database was hit at most 12 times a minute (once every 5 seconds). At 40 queries per page render, that reduced our queries from 200k/minute to a mere 480/minute, no matter the number of visitors.
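Worked out, assuming a single cache key for the shop page and that every cache miss runs the same 40 queries as an uncached page view:

```latex
\[
\frac{60\ \text{s/min}}{5\ \text{s}} = 12\ \text{rebuilds/min}, \qquad 12 \times 40 = 480\ \text{queries/min}
\]
\[
1 - \frac{480}{200{,}000} = 0.9976 \approx 99.8\%
\]
```

The 12-per-minute figure assumes only one request rebuilds the cache when it expires; if many requests miss at the same instant, each of them may run the queries (a so-called cache stampede).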

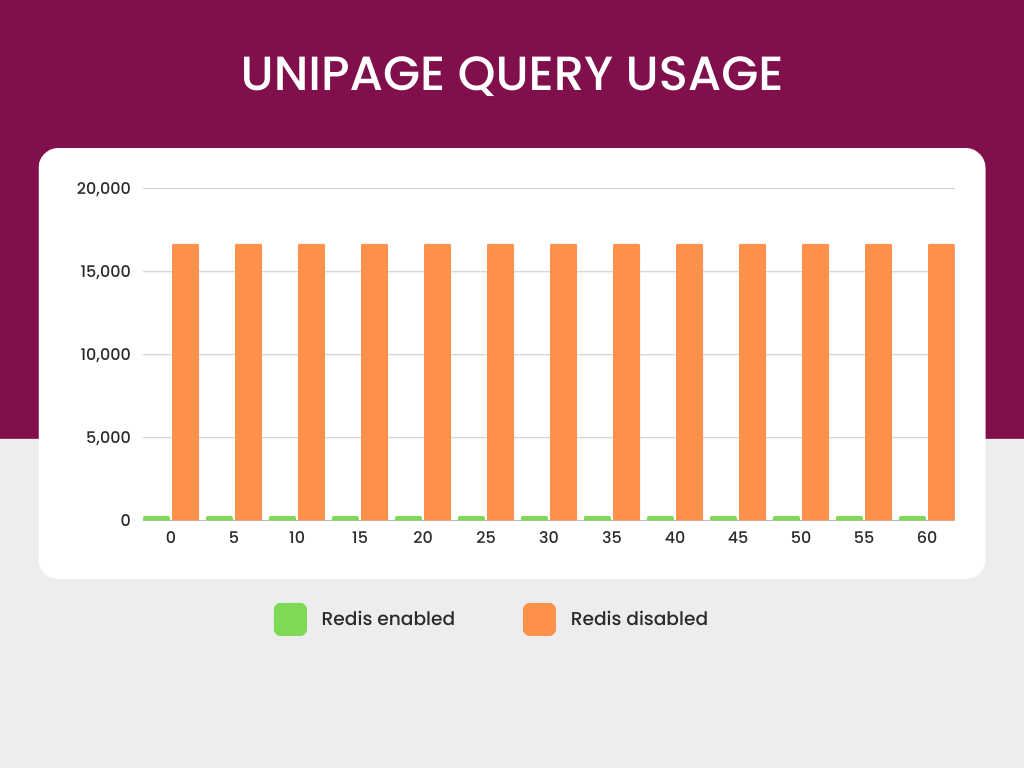

Looking at the graph above, it's safe to say that putting this measure in place was a leap in the right direction.

99.8% fewer queries 🚀

From 200,000 queries per minute to 480 per minute

While not an ideal solution, this 5-second cache bought us the time we needed to implement the full solution.

3 Lessons learned

Even 15 minutes of downtime has huge implications for all our customers, so we took this issue seriously. We took a step back and analysed what happened and how we could prevent it in the future.

Lesson 1: Stick to your niche

This entire situation wouldn't have happened if we hadn't allowed 'tickets' to be sold on our platform. We're a food ordering platform where scarcity is almost never an issue: supply usually meets demand. Food platforms and ticketing platforms have virtually nothing in common, and our platform was not optimised for the latter.

On the bright side, we're now capable of handling a massive influx of users (e.g. a flash sale)!

Lesson 2: Start thinking about caching sooner

I'm not a fan of prematurely optimising applications, especially when you want to ship features fast. But even though this situation was unique to our platform, we suddenly had to scramble to find a way to handle the influx of users. In the future, I'll start thinking about caching strategies sooner (and implement them before I need them).

Lesson 3: The weakness of a single-database multi-tenant application

Multi-tenant applications come in many flavours. We opted to run everything off a single database, which makes it easy to maintain. The biggest drawback is that when the database fails, all our tenants go down with it. We have started addressing this by implementing a Shopify-like 'pod' architecture. If you want to learn more about this architecture, check out this great talk: https://www.infoq.com/presentations/shopify-architecture-flash-sale/
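A rough sketch of the routing idea behind pods, as we understand it from the talk above: each tenant is assigned to a pod, each pod has its own database, and requests for a tenant only ever go to that tenant's pod, so a failing database only takes down the tenants on that pod. This is a hypothetical Python illustration, not Unipage's or Shopify's implementation; `Pod`, `PodRouter` and the DSNs are made up.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class Pod:
    """A hypothetical pod: its own database serving a subset of tenants."""
    name: str
    database_dsn: str


class PodRouter:
    def __init__(self, pods: Dict[str, Pod], tenant_to_pod: Dict[int, str]) -> None:
        self._pods = pods
        self._tenant_to_pod = tenant_to_pod
        self._down: Set[str] = set()

    def pod_for(self, tenant_id: int) -> Pod:
        # Every request for a tenant is routed to that tenant's pod only.
        return self._pods[self._tenant_to_pod[tenant_id]]

    def mark_down(self, pod_name: str) -> None:
        self._down.add(pod_name)

    def is_available(self, tenant_id: int) -> bool:
        # A failing pod only affects the tenants assigned to it.
        return self.pod_for(tenant_id).name not in self._down


pods = {
    "pod-1": Pod("pod-1", "mysql://db-1.internal/unipage"),
    "pod-2": Pod("pod-2", "mysql://db-2.internal/unipage"),
}
router = PodRouter(pods, tenant_to_pod={1: "pod-1", 2: "pod-2", 3: "pod-1"})
router.mark_down("pod-1")
print([t for t in (1, 2, 3) if router.is_available(t)])  # [2]
```

With a single shared database, every tenant sits in the same failure domain; splitting tenants across pods trades some operational simplicity for a smaller blast radius when one database gets overwhelmed.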

In the end, we were able to keep this to a one-time occurrence, so the damage was limited. The next day, the campsite guests were able to book their tickets without crashing our system, and everyone was happy they could take a refreshing dive in the pool 🤿